- designing audio-reactive generative music visualizations

- building custom software and/or hardware to facilitate performances

- exploring how music, code, & design interact

Current Toolkit:

Max/MSP, openFrameworks, VDMX, custom ISF shaders, MIDI controller

About My Work

I've been creating live music visualizations & collaborating with musicians for performances since 2005. Below is a list of projects I've designed, performed and built.

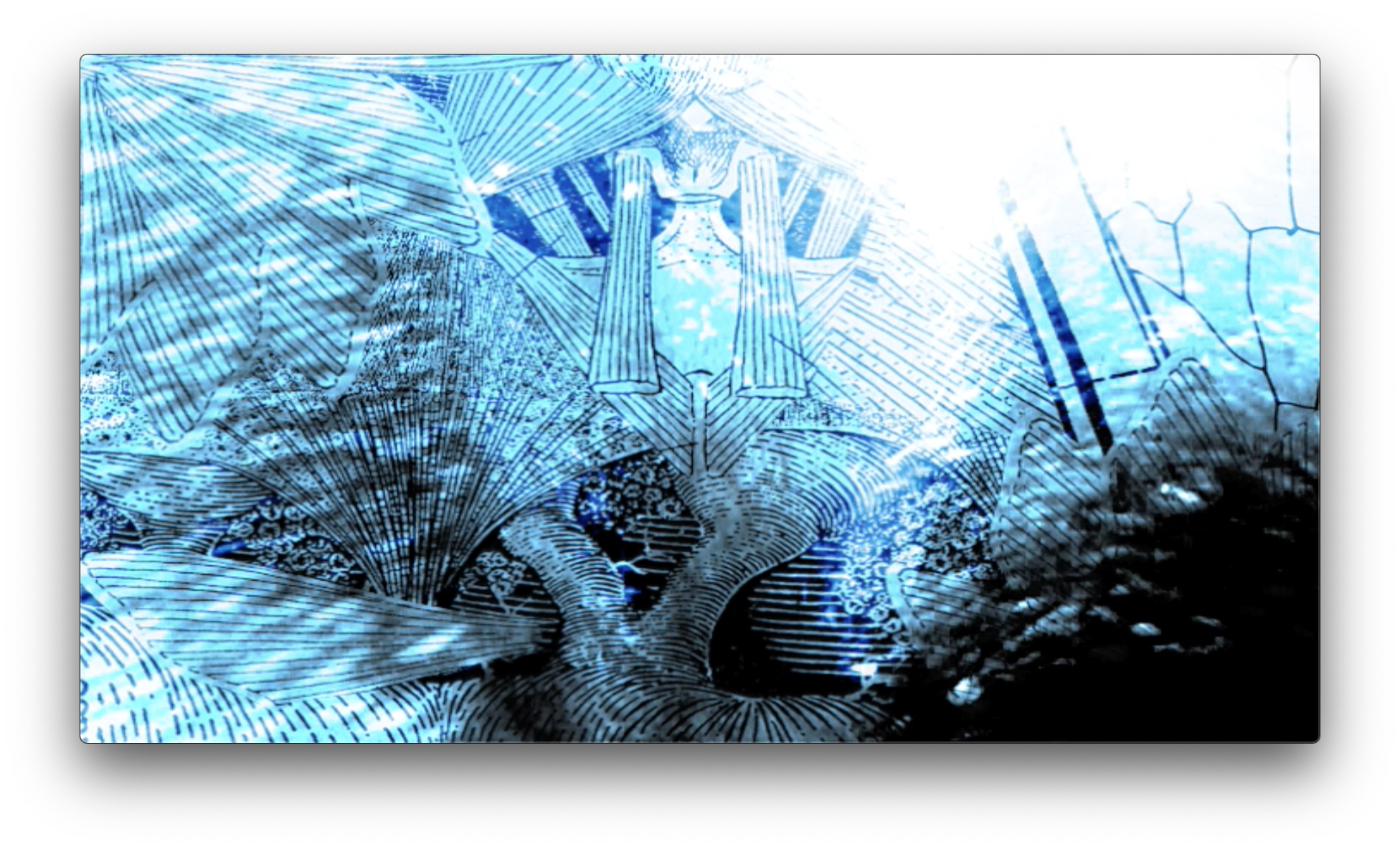

These visuals combine machine-learning-generated textures with fast, custom audio-reactive shaders in VDMX. With this approach I've been designing dense, highly responsive visual canvases for compositions on the heavier, denser end of the audio spectrum.

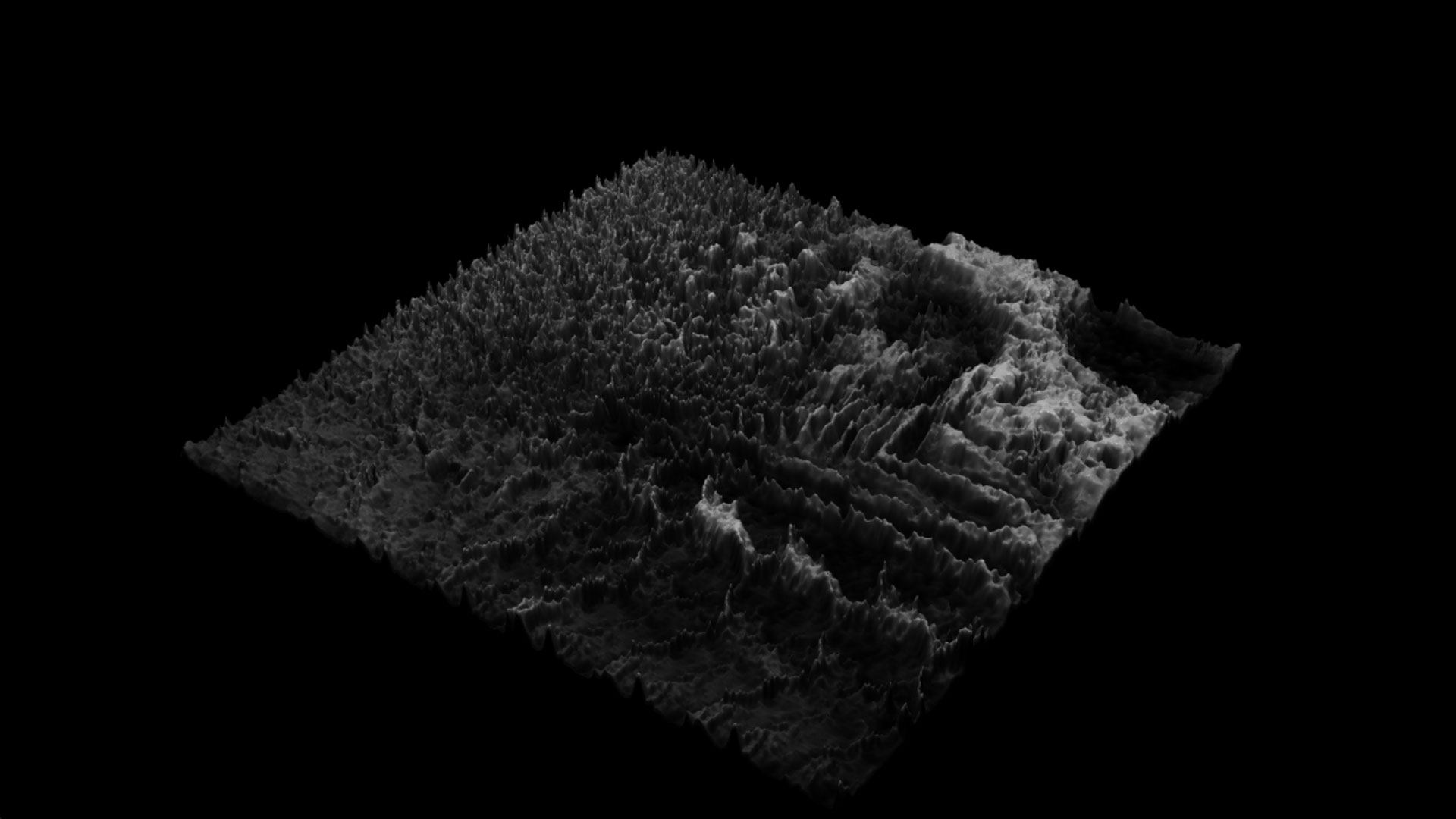

Custom machine-learning generated textures

To create these images I use a DCGAN (deep convolutional generative adversarial network) to explore the latent space of visual possibilities within a dataset of images representing a wide array of 'textures'.

This is a basic ML technique for generating images, and is essentially the same as this project if you'd like to dig a little deeper. The resulting textures are used as image sources.
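For readers curious what "exploring the latent space" can look like in practice, here is a minimal Python sketch of one common approach: pick two random latent vectors and step between them. It is an illustration only, not the code used for these textures. The latent size of 100, the linear interpolation, and the names `sample_latent` and `latent_walk` are all assumptions, and the trained generator itself is not shown.

```python
import random

LATENT_DIM = 100  # assumption: a common DCGAN latent size, not confirmed by the source


def sample_latent(rng, dim=LATENT_DIM):
    """Draw one latent vector from a standard normal distribution."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def latent_walk(z_a, z_b, steps):
    """Return `steps` vectors moving linearly from z_a to z_b, endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # 0.0 at the start, 1.0 at the end
        path.append([(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)])
    return path


rng = random.Random(7)  # fixed seed so the walk is repeatable
z_start = sample_latent(rng)
z_end = sample_latent(rng)
walk = latent_walk(z_start, z_end, steps=8)
# Each vector in `walk` would be passed to a trained generator (not shown here)
# to render one texture.
```

Feeding each step of the walk to a generator would yield a sequence of textures that shift gradually from one to the next, which is one way to browse what a trained model can produce.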

More examples:

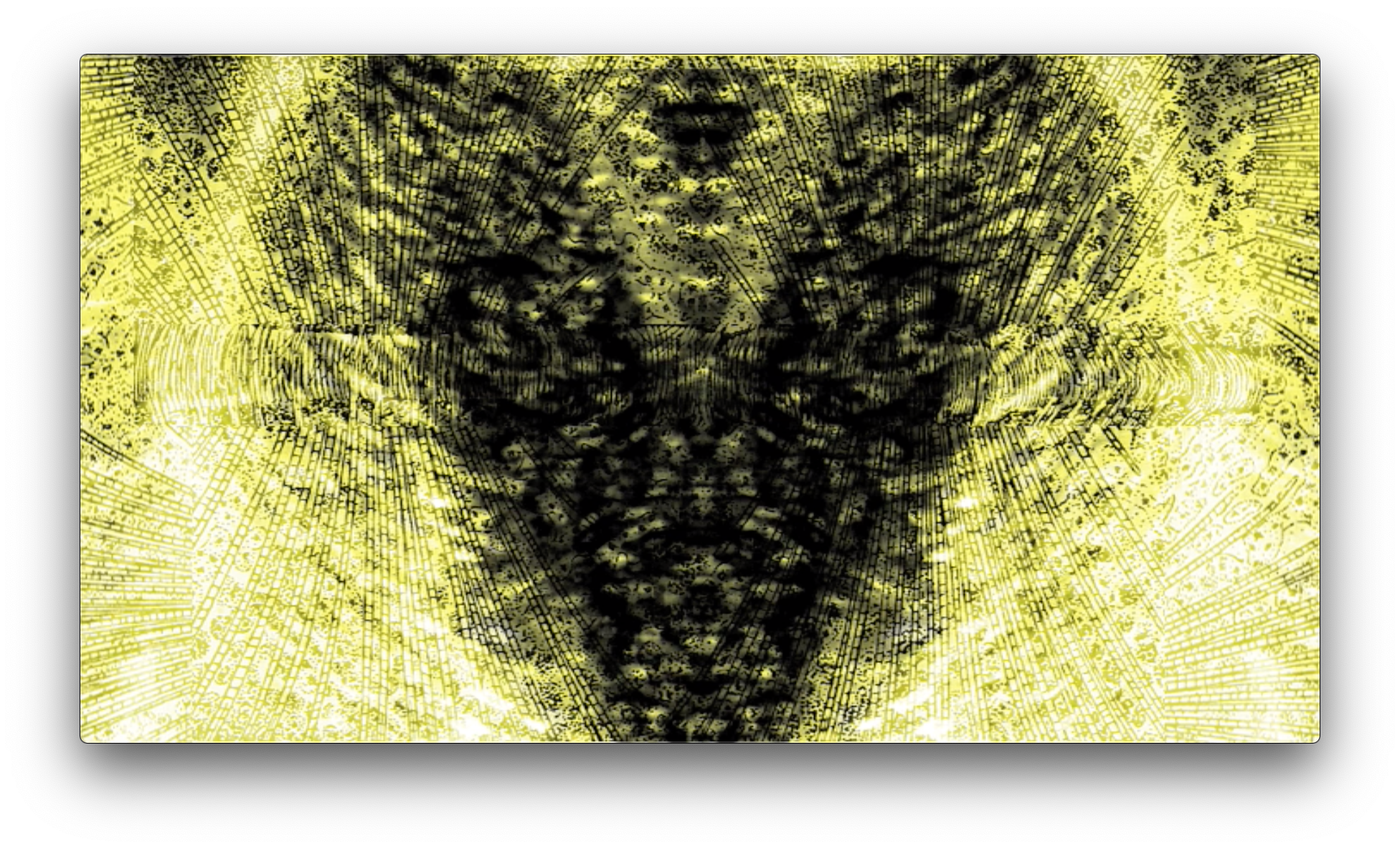

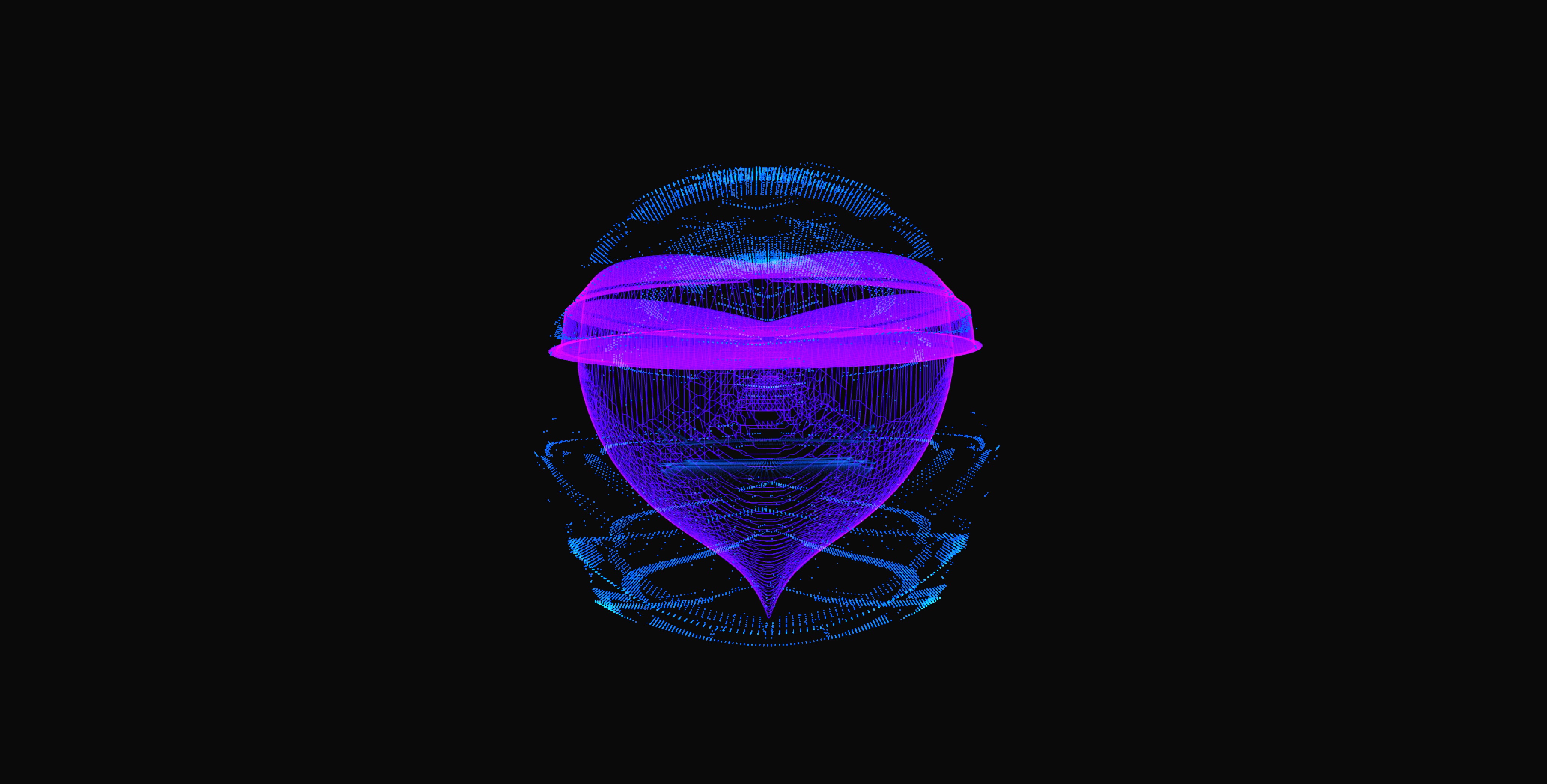

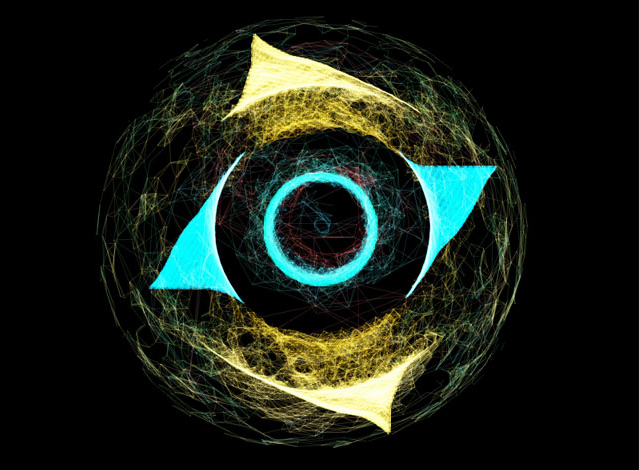

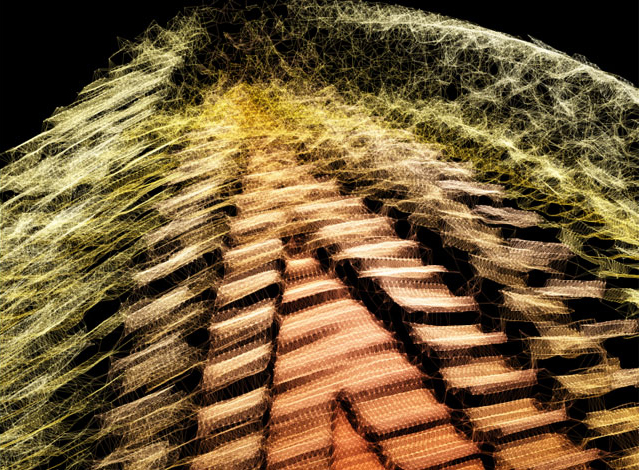

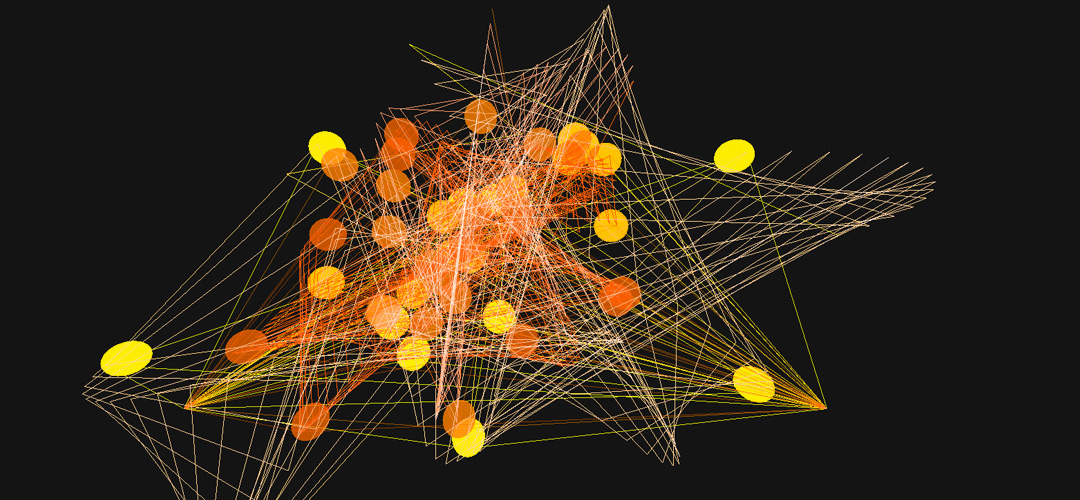

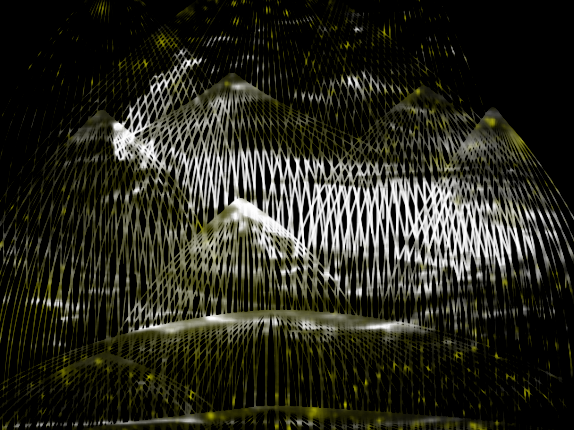

Mesh-based audio reactive visuals

A system created for my own music projects, these visuals are generated by a custom openFrameworks app that takes a multi-channel audio signal as direct input and uses it to manipulate the points on complex meshes. The 3D meshes are generated by openFrameworks from images I designed in 2D and then translated into 3D space, allowing for a light, fast, flexible workflow and performance.
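The general idea can be sketched in a few lines. The example below is written in Python for readability and is not the openFrameworks app itself. It assumes the mesh is a simple grid whose depth comes from image brightness, that each audio channel drives one vertical band of the mesh, and that channel loudness is measured as RMS. The function names, the `gain` value, and the sample data are all hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square level of one channel's audio buffer."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def build_grid_mesh(brightness, depth=1.0):
    """Turn a 2D brightness grid (values 0..1) into (x, y, z) vertices."""
    return [
        (float(x), float(y), value * depth)
        for y, row in enumerate(brightness)
        for x, value in enumerate(row)
    ]


def displace(vertices, channel_levels, width, gain=2.0):
    """Push each vertex along z by the level of the channel owning its column band."""
    n = len(channel_levels)
    moved = []
    for x, y, z in vertices:
        channel = min(int(x * n / width), n - 1)  # map column to a channel index
        moved.append((x, y, z + gain * channel_levels[channel]))
    return moved


# Hypothetical input: a 2x4 brightness grid and one buffer per audio channel.
image = [[0.0, 0.5, 0.5, 1.0],
         [0.0, 0.5, 0.5, 1.0]]
buffers = [[0.5, -0.5, 0.5, -0.5],  # channel 0: steady signal
           [0.0, 0.0, 0.0, 0.0]]    # channel 1: silence

mesh = build_grid_mesh(image)
levels = [rms(b) for b in buffers]
frame = displace(mesh, levels, width=4)  # one displaced frame of the mesh
```

In a live setting this displacement step would run once per audio buffer, so the left half of this small mesh would rise with channel 0 while the right half stays at its resting depth.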

More examples:

I had the opportunity to explore music visualization as a master's thesis at Parsons The New School for Design while studying there from 2011 to 2013.

Here I started working with generative music visual concepts, exploring how music & code interact and building experimental hardware / software for music visualizations.

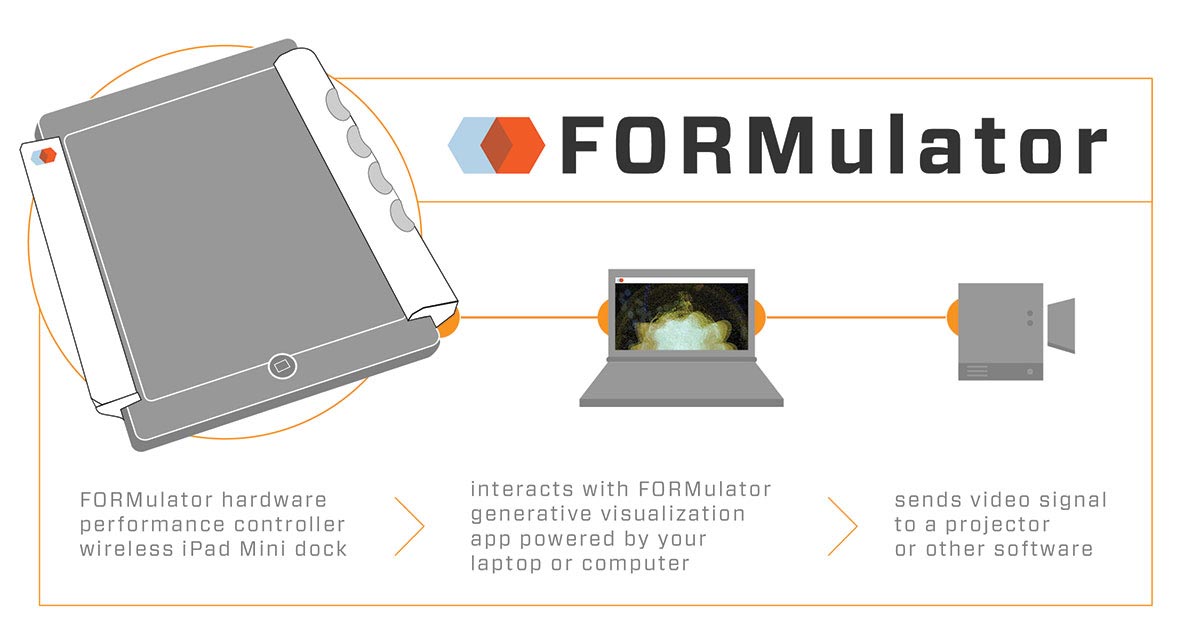

Formulator

• self-initiated / MFA Design & Technology thesis project for Parsons The New School for Design

• Music Driven Generative Visualization Software/Hardware Platform

Project Details

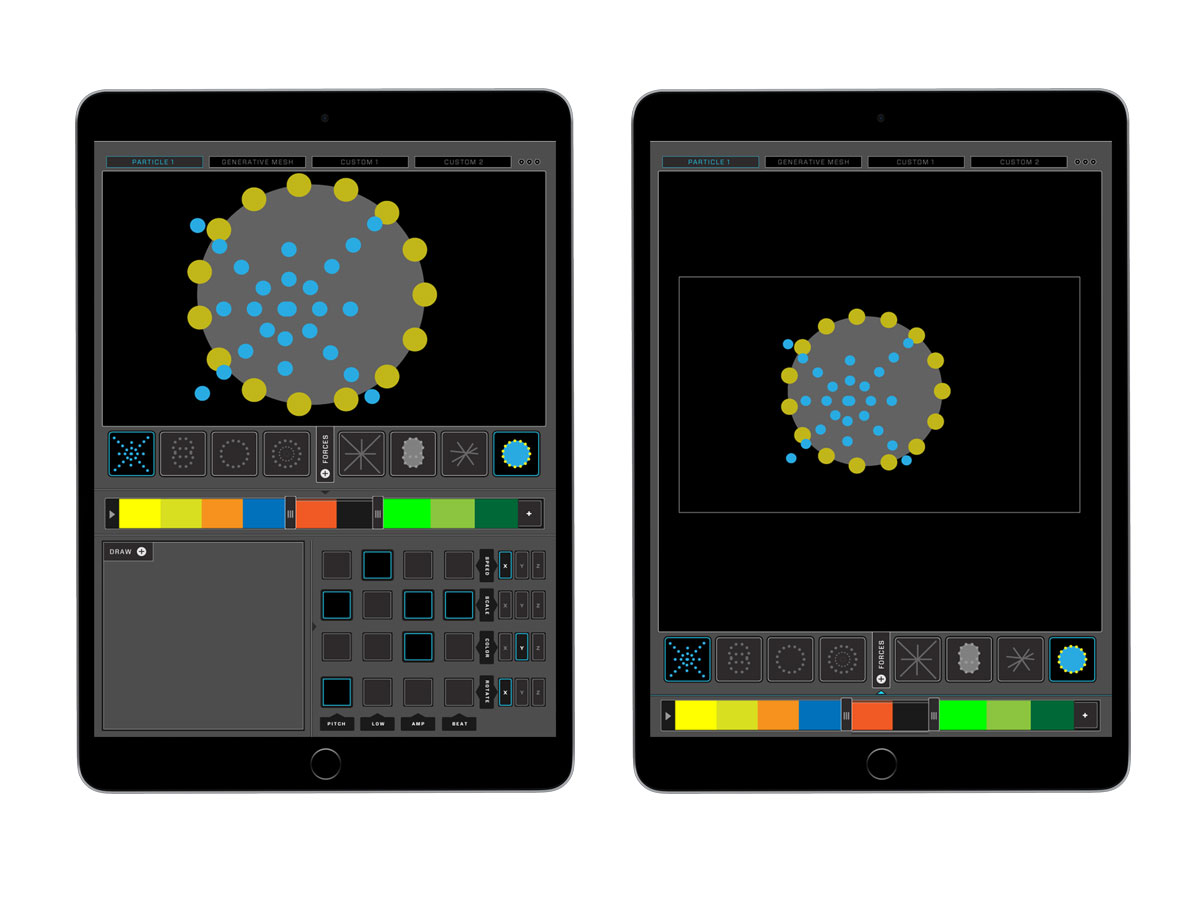

• design & coding of hardware + software system (Max/MSP / OSC / openFrameworks)

• testing & prototyping of physical & digital interfaces

• visual design of music visualization

• design of supporting materials / documentation & thesis writing

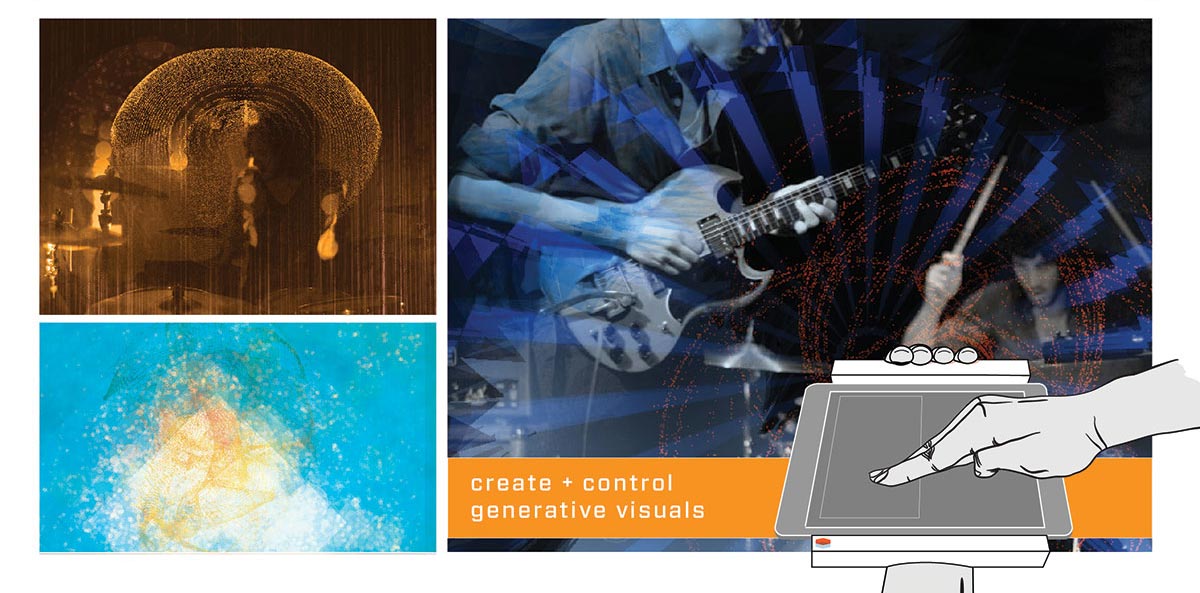

An ergonomic live performance tool for visual artists enabling gestural control of generative, audio-reactive music visualizations.

Designed as a tool for visual artists working with live musicians, it enables its user to create and control generative visuals synced to and driven by music, allowing the artist to use a live audio signal as a painter would use paint on a canvas.

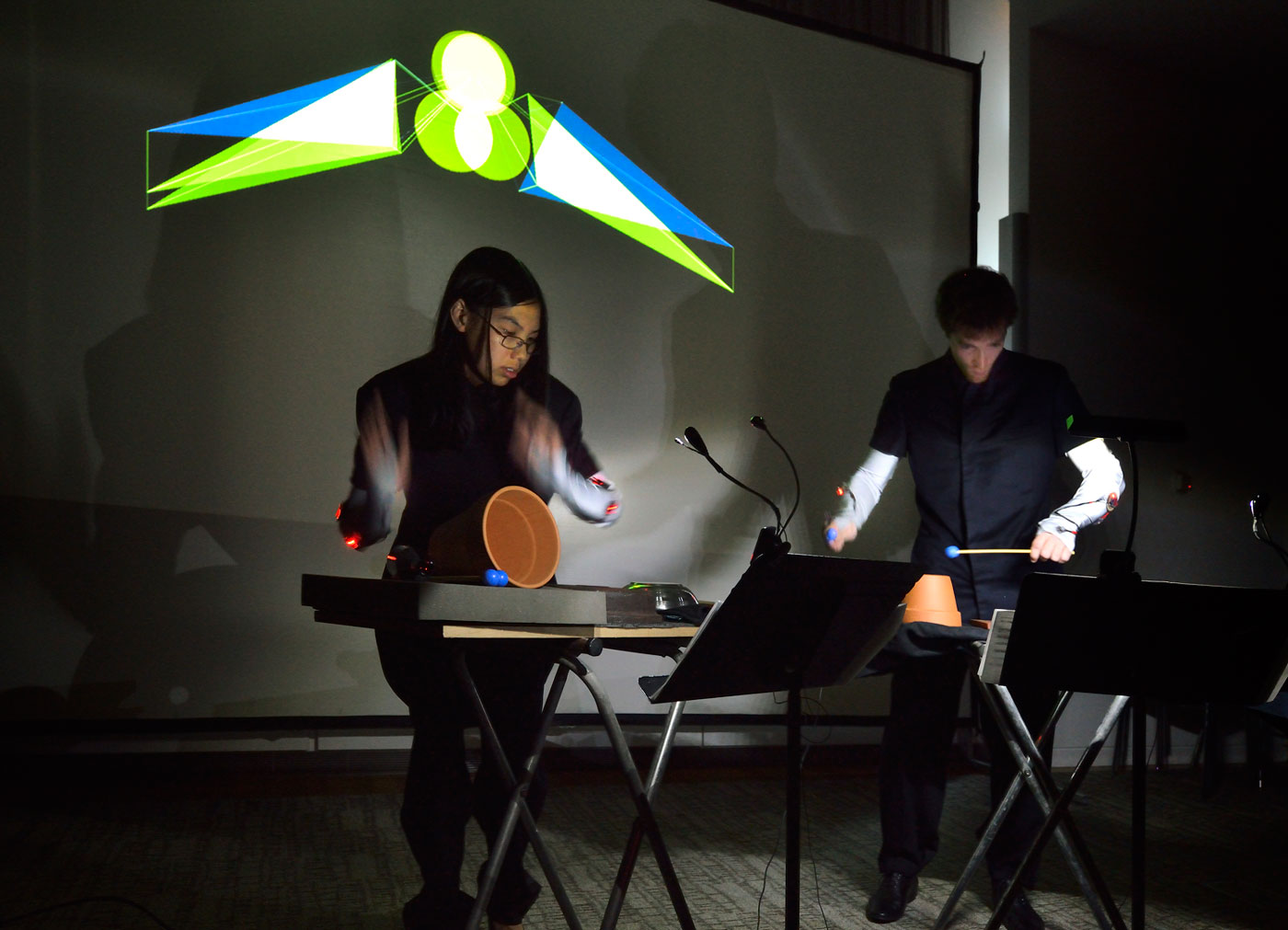

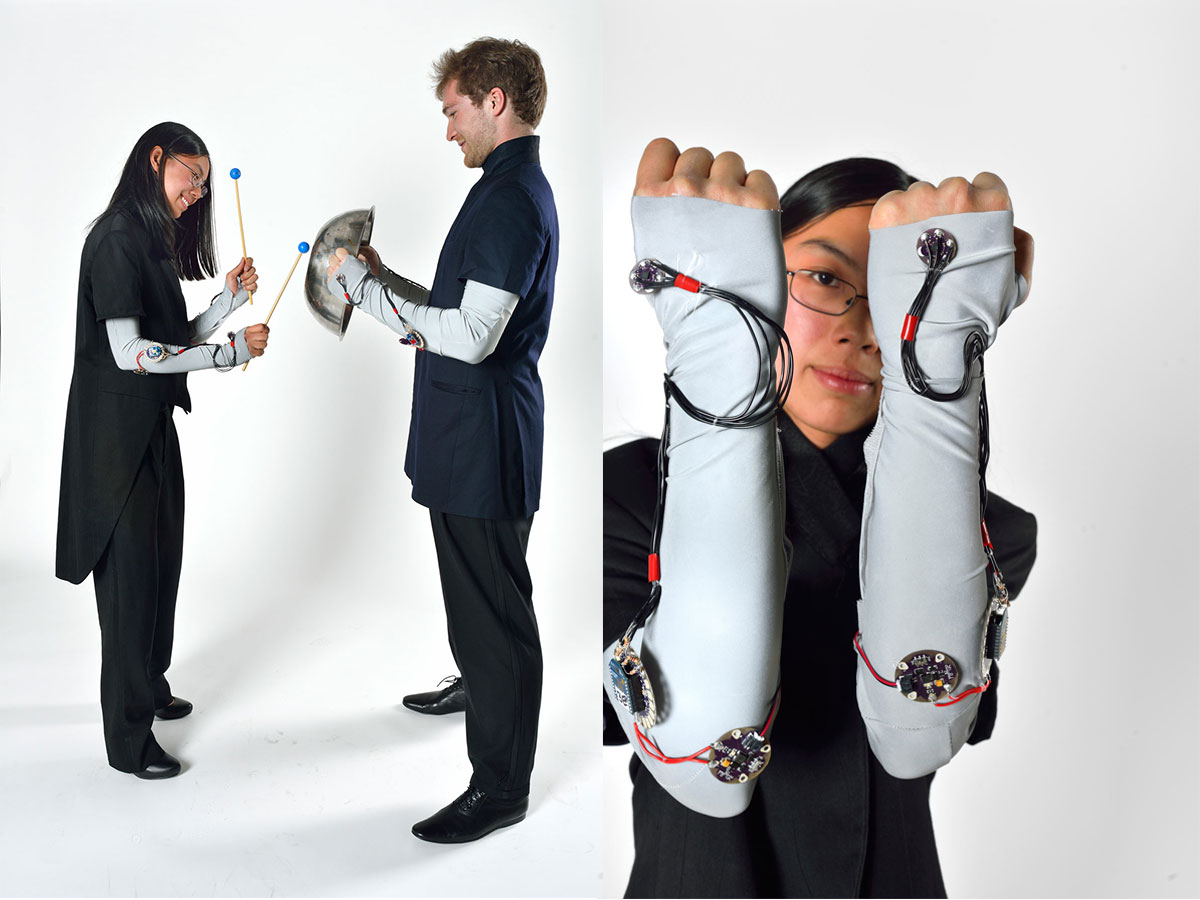

The Future of Orchestral Garments

A project in collaboration with the Mannes School of Music & Parsons MFA Fashion department.

Here I designed visuals to work with a custom-made, ergonomic smart glove built to capture and display data from the physical movements of an orchestral percussionist’s performance, using that data to drive the live music visualization.

Together with my collaboration partner Ross Leonardy, who assisted with hardware, I designed and developed a pair of wireless, sensor-embedded gloves to visualize and capture data from an orchestral percussionist's movements. The process started with user research via the Baltimore Symphony Orchestra and proceeded through prototyping, testing and iteration. The project concluded with a live public performance, pictured below.

My contribution:

• concept, design & coding of hardware + software system

• user research, testing, & prototyping

• visual design of music visualization

• design of supporting materials / documentation & information graphics

Big Bang / Visual Music Collaboration w/ Mannes School of Music & Jovan Johnson

As a duo consisting of myself and a very talented horn player, Jovan Johnson, we created a collaboratively written and designed piece of visual music. It was performed live using a loop pedal and a custom-made hardware device controlling generative music visualizations.

Coded in openFrameworks & Max/MSP and performed using a prototype of my music-driven generative visualization system.

I started creating music visualizations with various artists in the Denver underground music scene around 2005. These live experimental performances were rarely captured, but a few remaining artifacts still exist below! (please don't mind the pixels...)

Autokinoton

Live projections performed with a heavy / progressive / instrumental music group. Performances used Modul8 with sampled and shot video loops edited with AE & digital collage. I also contributed graphic design, screen-printed posters, and music videos.

The Horace Van Vaughn

Live projections performed alongside a heavy instrumental / improv Denver music collective. Performances used Modul8 with sampled and shot video loops edited with AE & digital collage.

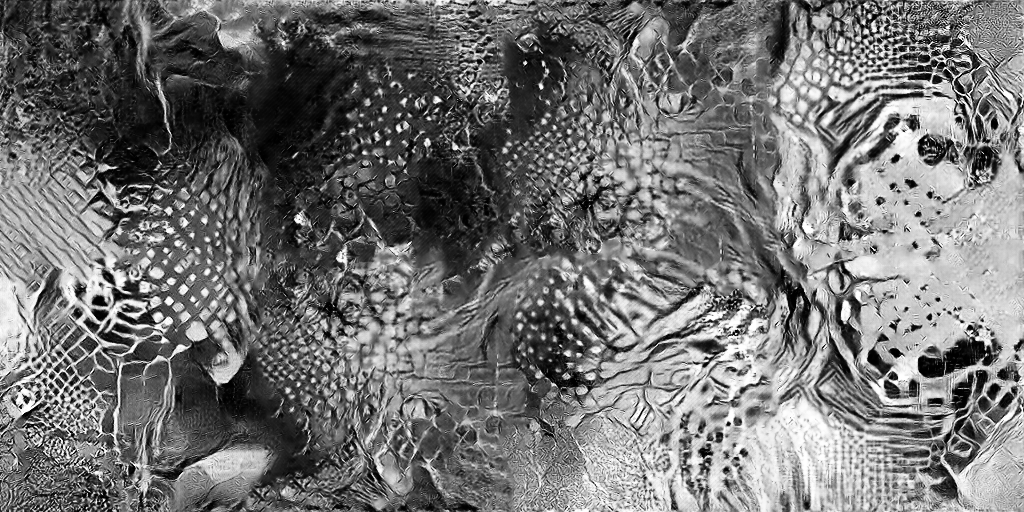

Cult of the Supreme Being

Live, MIDI-controlled visual projections performed alongside heavy improvisational drone music as part of a collaborative trio. Performances used Modul8 with sources composed of sampled and shot video loops edited with AE. The visual concept was to collage together different organic forms and movements derived from nature.